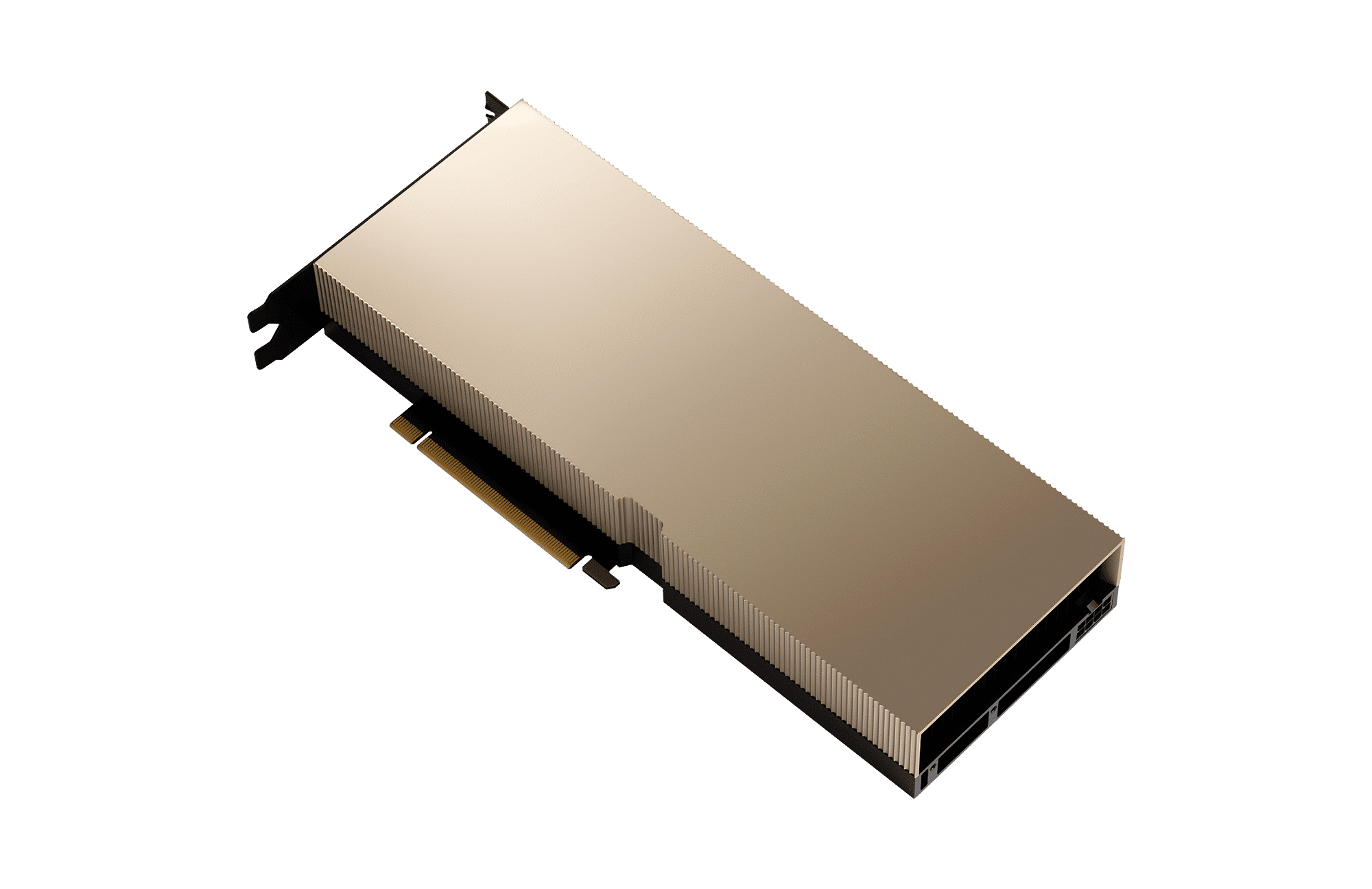

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration for AI, data analytics, and high-performance computing (HPC) at every scale. Powering the world's highest-performing elastic data centers, the A100 offers up to 20x higher performance than the previous-generation NVIDIA Volta GPUs.

| Specification | A100 80GB PCIe |
|---|---|
| Architecture | Ampere |
| FP64 | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS |
| Tensor Float 32 (TF32) | 156 TFLOPS / 312 TFLOPS* |
| BFLOAT16 Tensor Core | 312 TFLOPS / 624 TFLOPS* |
| FP16 Tensor Core | 312 TFLOPS / 624 TFLOPS* |
| INT8 Tensor Core | 624 TOPS / 1,248 TOPS* |
| GPU Memory | 80 GB HBM2e |
| GPU Memory Bandwidth | 1,935 GB/s |
| Max Thermal Design Power (TDP) | 300 W |
| Multi-Instance GPU (MIG) | Up to 7 MIG instances @ 10 GB |
| Form Factor | PCIe |
| Interconnect | NVIDIA NVLink Bridge for up to 2 GPUs: 600 GB/s; PCIe Gen4: 64 GB/s |
| Server Options | Partner and NVIDIA-Certified Systems with 1-8 GPUs |

\*With sparsity.
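A few ratios can be read straight off the table. The sketch below is a minimal illustration that copies the peak figures above into Python and derives them: the sparse-to-dense speedup for each Tensor Core precision, the memory assigned when the GPU is split into the maximum of seven 10 GB MIG instances, and the ratio of the two listed interconnect bandwidths. It also computes a roofline-style "ridge point" (peak operations divided by peak memory bandwidth). The roofline framing, and all variable and function names, are illustrative assumptions rather than anything from the spec sheet, and every figure is a theoretical peak that real kernels will not fully reach.

```python
# Peak figures copied from the A100 80GB PCIe table above.
# Units: TFLOPS (TOPS for INT8); memory in GB; bandwidth in GB/s.
# All names here are hypothetical, chosen only for this illustration.
DENSE_TENSOR = {"TF32": 156, "BF16": 312, "FP16": 312, "INT8": 624}
SPARSE_TENSOR = {"TF32": 312, "BF16": 624, "FP16": 624, "INT8": 1248}
MEMORY_GB = 80
MEM_BW_GBPS = 1935
MIG_SLICES, MIG_SLICE_GB = 7, 10
NVLINK_GBPS, PCIE_GBPS = 600, 64


def sparsity_speedup(precision: str) -> float:
    """Ratio of the sparse (*) peak to the dense peak for one precision."""
    return SPARSE_TENSOR[precision] / DENSE_TENSOR[precision]


def ops_per_byte(peak_tera: float) -> float:
    """Peak operations per byte of HBM traffic (roofline 'ridge point').

    Assumption: simple roofline model. Below this arithmetic intensity a
    kernel is limited by memory bandwidth rather than by compute.
    """
    return (peak_tera * 1e12) / (MEM_BW_GBPS * 1e9)


def mig_memory_assigned() -> int:
    """GB assigned to the maximum number of 10 GB MIG instances."""
    return MIG_SLICES * MIG_SLICE_GB


if __name__ == "__main__":
    for p in DENSE_TENSOR:
        print(f"{p}: sparsity speedup {sparsity_speedup(p):.1f}x, "
              f"dense ridge point {ops_per_byte(DENSE_TENSOR[p]):.0f} ops/byte")
    print(f"MIG: {mig_memory_assigned()} GB of {MEMORY_GB} GB in {MIG_SLICES} instances")
    print(f"NVLink Bridge vs PCIe Gen4: {NVLINK_GBPS / PCIE_GBPS:.1f}x listed bandwidth")
```

Running it shows that every starred figure is exactly 2x its dense counterpart, and that at FP16 Tensor Core peak a kernel would need roughly 161 operations per byte read from HBM before compute, rather than the 1,935 GB/s memory bandwidth, becomes the limit.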